
January 3, 2026

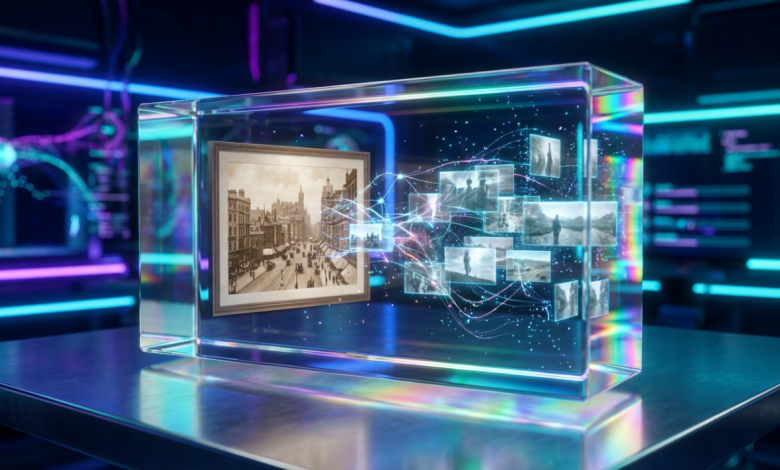

THE EVOLUTION OF AI VIDEO GENERATION: FROM STATIC CREATION TO INTELLIGENT VISUAL SYSTEMS

This article analyzes emerging trends in AI video generation, focusing on image-first workflows, multi-model pipelines, and real-world creative use cases for professionals.

AI video generation has moved far beyond simple animations or text-driven clips. Today, it is evolving into an intelligent visual system that understands motion, emotion, context, and creative intent. Brands, creators, and designers are no longer asking whether AI-generated video is usable, but how to integrate it into real production workflows without sacrificing quality or control.

This article explores the structural shift happening inside AI video generation technologies, why traditional “prompt-only” creation is no longer enough, and how new platforms are bridging the gap between automation and creative direction. Along the way, we will reference tools like Genmi AI as examples of how multi-model ecosystems are shaping this new phase of visual creation.

By the end, readers will gain a practical understanding of emerging trends, real-world use cases, and strategic considerations for adopting AI-driven video and image systems responsibly and effectively.

From One-Off Clips to Visual Systems

Early AI video tools focused on novelty: short clips, experimental motion, or surreal visuals generated from simple prompts. While impressive, these outputs often lacked consistency, scalability, and creative control—critical requirements for professional use.

The current wave of AI video generation emphasizes systems, not isolated outputs. These systems combine:

- Image-to-image foundations

- Image-to-video motion layers

- Text-guided narrative logic

- Style, lighting, and motion parameters

This architectural shift allows creators to iterate, refine, and reuse assets instead of generating disposable visuals.

Why Image-to-Image Is Becoming the Foundation

One of the most important trends in AI video generation is the rise of image-to-image workflows as a starting point rather than an optional feature.

Instead of generating everything from text, creators increasingly:

- Define a visual baseline (character, product, environment)

- Lock key stylistic attributes

- Animate or extend the image into video

Platforms with strong image-to-image pipelines, such as those highlighted in Genmi AI’s image-to-image workflows, make it far easier to keep characters, products, and styles consistent across campaigns, episodes, and brand assets.

This shift is especially valuable in advertising, game asset creation, and social media storytelling where visual continuity matters.

The Rise of Multi-Model Creative Pipelines

Another major trend is the integration of multiple AI models within a single creative environment. Instead of forcing one model to do everything, modern platforms allow creators to select different engines for different tasks—such as realism, motion fidelity, or stylistic abstraction.

This mirrors how professional production works in practice: no single tool excels at every stage.

Some ecosystems now combine cinematic video models, experimental motion engines, and specialized utilities, such as watermark-free generation, into unified pipelines. Post-generation cleanup tools, including watermark handling, remove friction that previously made AI video impractical at scale. One example is Genmi’s approach to Sora watermark removal, which reflects a broader industry push toward production-ready outputs rather than demos.

AI Video in Real Creative Scenarios

AI video generation is increasingly used in scenarios where speed and iteration outweigh traditional perfection-first pipelines:

- Ad concept testing: Generating multiple visual directions before committing to full production

- Creative prototyping: Visualizing ideas for pitches, storyboards, or internal alignment

- Social media content: Producing short-form, high-impact visuals with controlled motion

- Design exploration: Testing lighting, composition, and mood without reshoots

A common workflow is to generate a base image, animate subtle motion, and refine pacing, rather than create long-form video from scratch. This hybrid approach reduces creative risk while preserving flexibility.

Where AI Video Generation Is Headed

As AI video generation matures, the competitive edge will no longer come from raw generation speed. Instead, it will depend on how well platforms support decision-making, control, and integration into existing creative processes.

The next generation of tools will prioritize:

- Predictable outputs over randomness

- Modular workflows over one-click generation

- Creator agency over full automation

Educational resources and expert breakdowns, such as in-depth guides to evolving video models like those discussed in this Runway Gen analysis, show how creators are learning to work with AI rather than around it.

Best Practices for Adopting AI Video Tools

Before integrating AI video into professional workflows, teams should consider:

- Define usage boundaries: Know where AI adds value and where human refinement remains essential

- Prioritize consistency: Lock visual references early to avoid brand drift

- Evaluate export readiness: Ensure outputs meet platform and resolution requirements

- Plan for iteration: Choose tools that support refinement, not just generation

AI video works best when treated as a creative accelerator, not a replacement for judgment or strategy.

Conclusion

AI video generation is no longer about novelty or automation alone. It is evolving into a structured, system-level capability that supports real creative work across advertising, design, storytelling, and digital production.

By understanding emerging trends—such as image-first workflows, multi-model pipelines, and production-ready outputs—creators and teams can adopt AI tools with confidence and clarity. The real opportunity lies not in generating more content, but in creating better, faster, and more intentional visuals with the right balance of intelligence and control.

By Erika Balla, The AI Journal

Destinate creates professionally produced cinematic AI videos for major openings, launches, and pre-debut campaigns. Using a hybrid approach that blends GenAI, real-world assets, and creative direction, we help brands bring destinations, developments, and experiences to life before they open.

LOCATIONS

- NORTH AMERICA Los Angeles, USA

- LATIN AMERICA Mazatlán, Mexico

- ASIA Hong Kong, SAR